Crafting a Brand Voice for Conversational AI

When Automation Meets Empathy

Strategic Voice Design for Conversational AI

A brand-driven voice strategy for an enterprise AI assistant that turned a cost center into a culture connector, reducing internal support tickets by 80% while building trust through humanized tone and design.

The Strategic Opportunity

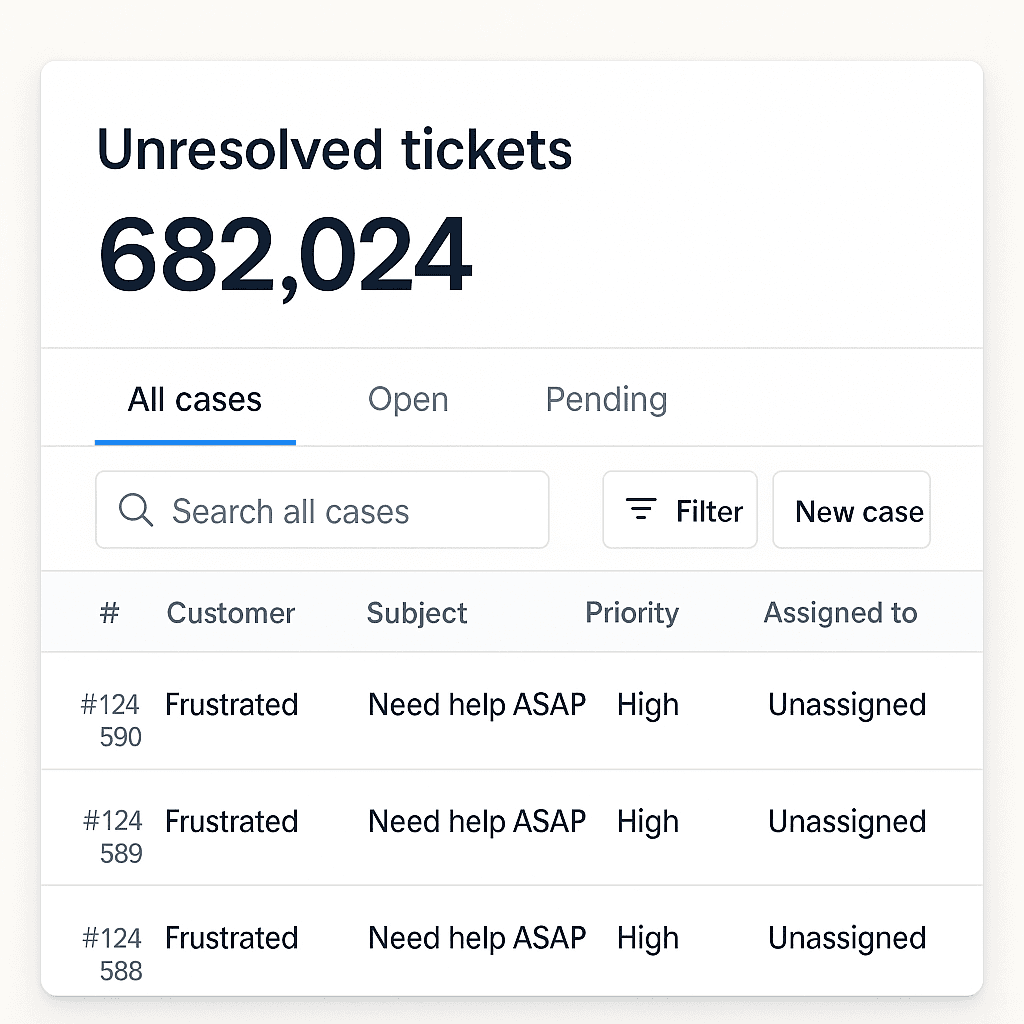

Technicians were drowning in tickets they shouldn't have been handling.

Sellers constantly reached out to tech support for basic software questions—password resets, simple navigation, routine troubleshooting. What should have been quick self-service was creating bottlenecks that prevented technicians from focusing on complex, high-value problems.

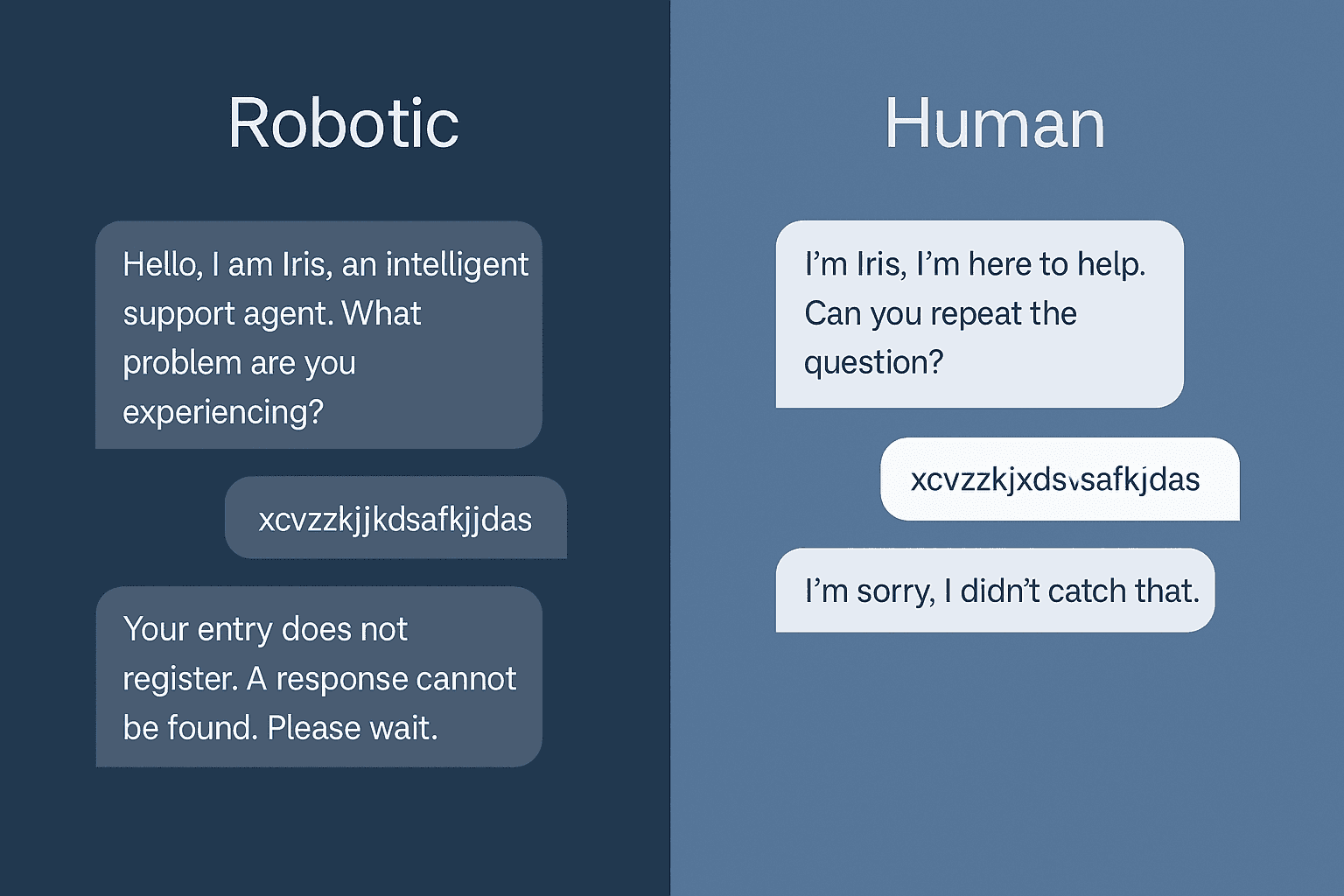

The company launched a conversational AI to deflect these tickets, but early iterations felt robotic and distant. Users avoided the system, defaulting back to creating support tickets.

The real challenge: How do you make an AI assistant feel trustworthy and human without being fake?

This wasn't just a chatbot project. It was a moment to shift how people felt about automation entirely.

That challenge led to a creative platform that reframes the entire automation conversation:

“The best automation doesn’t replace human connection; it enhances it.”

Tagline: Support that feels human, even when it’s not.

Positioning: Iris is not just an AI assistant. Iris is a voice-first brand ambassador, blending clarity with warmth to make automation feel personal.

The Insight That Started Everything

The biggest risk wasn't sounding robotic. It was losing trust.

Most AI voice projects focus on efficiency—faster responses, fewer escalations, cost reduction. But I realized something crucial: if users don't trust the system, they won't use it. And without adoption, even the most technically sophisticated AI fails.

The insight that changed everything came from watching user behavior during early testing. People approached the AI with hesitation, tested it with simple questions, then abandoned it at the first sign of confusion.

This was a brand moment hiding inside a help ticket.

My Creative Development Process

Strategic Reframing

I approached this project as a brand problem, not just a UX one. The voice wasn't only delivering answers; it was shaping how people felt about automation in the first place.

Research Through Empathy

I ran design thinking workshops with stakeholders and users to understand not just what they needed, but how they wanted to feel when getting help. What I discovered: emotion showed up early, in the form of confusion, hesitation, and frustration.

Innovation in Testing

Instead of traditional user testing, I developed a Wizard of Oz methodology using Microsoft Teams. I posed as Iris and answered real user questions in real time, trying out different voice approaches. After each session, I revealed that I had been the "bot," and stakeholders immediately saw the value of building human conversation into automation.

The Work

Voice with Purpose

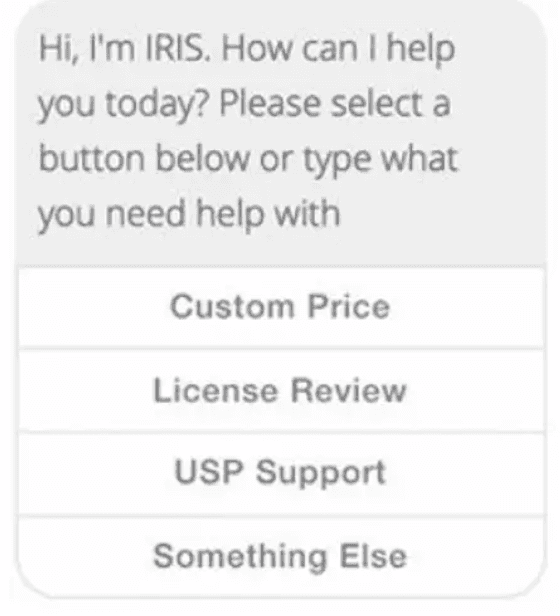

I led voice definition and content strategy across the full experience. Every prompt, fallback, and flow was crafted with clarity and empathy in mind.

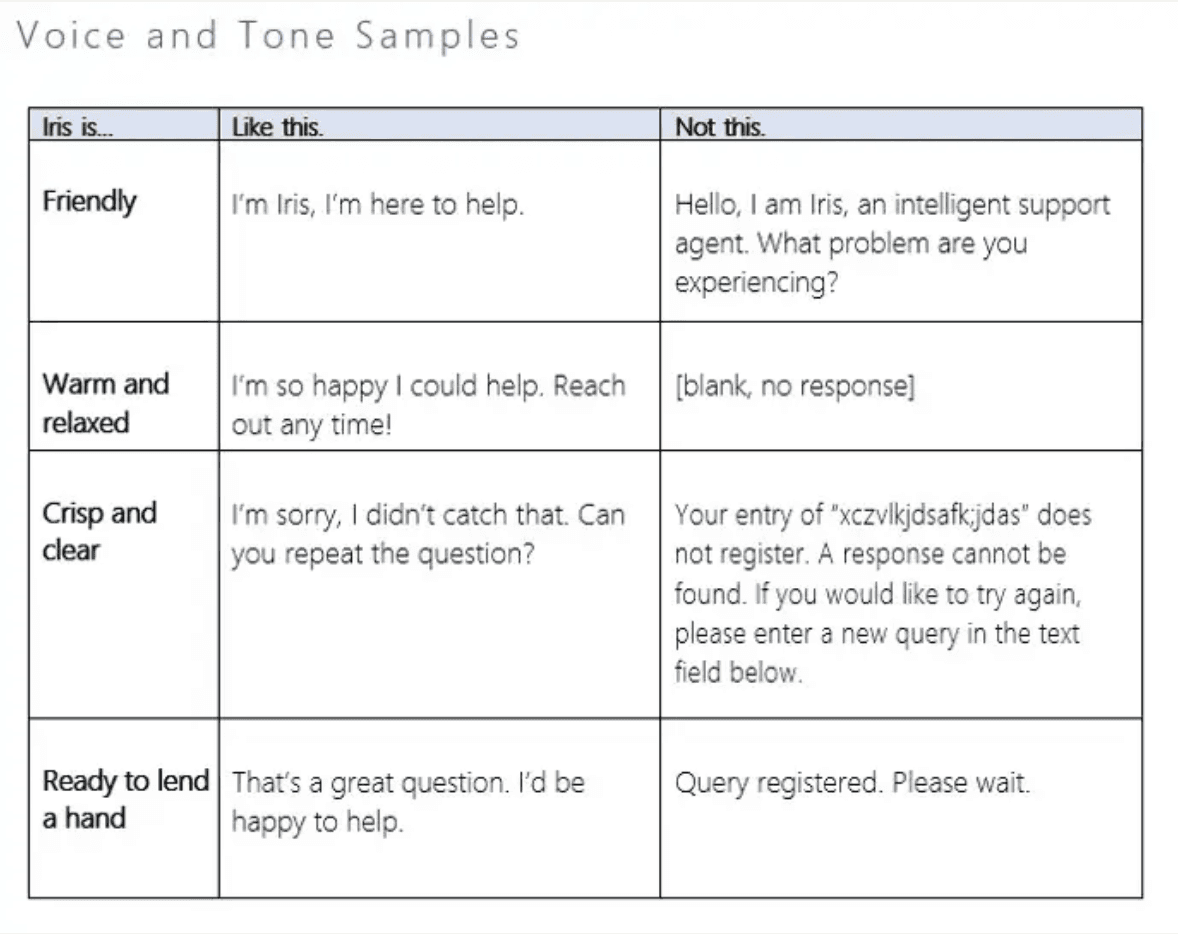

Core Voice Principles

Friendly: "I'm Iris, I'm here to help" vs. "Hello, I am Iris, an intelligent support agent"

Empathetic: "I'm so happy I could help. Reach out any time!" vs. [blank, no response]

Clear: "I'm sorry, I didn't catch that. Can you repeat the question?" vs. technical error messages

Strategic Implementation

Defined tone, UX voice, and content design with product, design, and research teams

Created modular message templates that felt natural, helpful, and brand-aligned

Designed voice variants that flexed tone based on urgency and emotional need

Built internal messaging tools and guidance to scale tone consistently

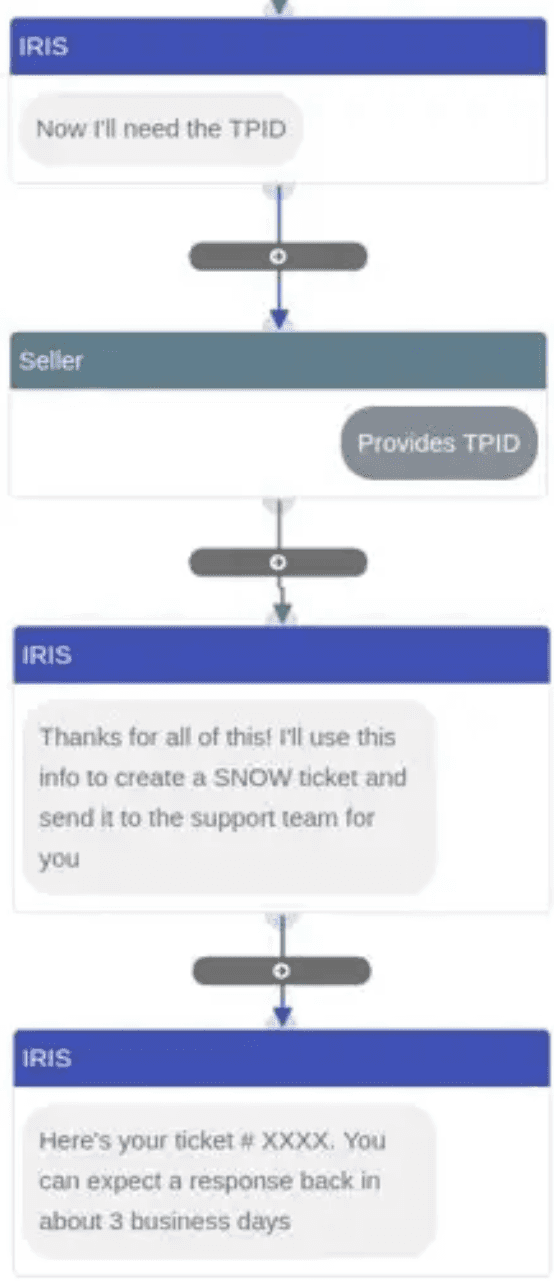

Human-Centered Conversation Flow

I built conversation paths that anticipated user emotion, from initial hesitation to successful resolution. I also included playful responses for users who wanted a more personal exchange, showing that an AI assistant can have personality without losing professionalism.

Creative Impact

80% reduction in internal support tickets

1 breakthrough testing methodology now used across AI projects

200+ conversation touchpoints redesigned for empathy

∞ times users said "thank you" to a chatbot named Iris

Strategic Considerations

Implementation Approach: Wizard of Oz testing enabled rapid iteration and stakeholder buy-in before expensive technical development. The Teams-based testing method is now used across other AI projects.

Stakeholder Alignment: Demonstrated value through live interaction rather than theoretical frameworks. Converted skeptical leadership by showing, not telling.

Success Measurement: Balanced efficiency metrics (ticket reduction, resolution time) with experience quality (user satisfaction, trust scores, system adoption rates).

Collaborative Framework: Led cross-functional workshops to ensure the voice strategy aligned with technical capabilities, business objectives, and user needs.

Competitive Advantage: A human-centered AI voice creates sustainable differentiation in the enterprise software market, where most solutions feel robotic and impersonal.

The Creative Truth

This wasn’t just a chatbot. It was proof that good technology doesn’t replace connection—it amplifies it.

When automation feels truly helpful, it becomes a brand differentiator, not just a tool.